The Xbox One vs. PlayStation 4 graphics spec comparison is stark, to say the least. Both systems use AMD's GCN (Graphics Core Next) architecture, but Sony's rendering tech has 50 per cent more raw computational power than the Xbox One equivalent - and that's before taking into account the other differences between the systems. The question is, what is the impact in actual gameplay conditions?

Behind the scenes, developers have suggested to us that we shouldn't jump to conclusions about the extent of the PlayStation 4's superiority, and that the 50 per cent boost in GPU power emphatically won't result in a commensurate boost to in-game performance. It's a topic we raised briefly with PS4 chief architect Mark Cerny when we met up with him a couple of weeks ago:

"The point is the hardware is intentionally not 100 per cent round," Cerny revealed. "It has a little bit more ALU in it than it would if you were thinking strictly about graphics. As a result of that you have an opportunity, you could say an incentivisation, to use that ALU for GPGPU."

An interpretation of Cerny's comment - and one that has been presented to us by Microsoft insiders - is that, given the way AMD graphics tech is utilised in games right now, a law of diminishing returns kicks in as more ALU resources are added.

We decided to put the major issues to the test by utilising equivalent PC hardware based on the same AMD architecture as the next-gen consoles. On the one hand, this has its disadvantages - the closed box environment of the consoles promises significant boosts over PC, while each machine uses its own graphics API which, presumably, introduces another performance differential. On the other hand, we know that next-gen launch games are developed initially on PC, with ports currently in progress for the new consoles. Also, this is an "In Theory" exercise, so commonality in the API at least means that the graphics tech for both test platforms is being tested on a like-for-like basis - and above all, this is a hardware experiment.

There's another compelling reason for the testing too: when it came to designing the next-gen consoles, most of the performance data available to Microsoft, Sony and AMD would have been derived from how GCN hardware is utilised by game engines running on PC. The shaping of the GCN architecture and its predecessors would have been heavily informed by their performance running actual games. We suspect that these results would also have influenced decisions elsewhere in the design of the consoles - for example, Sony's decision to pour resources into GPU compute.

To begin with, let's take a look at the choices we made for our target platforms. Let's be clear: our objective is not to create complete PC replicas of the consoles - that simply isn't possible. Our focus is the differential in graphics performance based on the available GPU specs. To achieve this, we wanted to ensure (as far as possible) that rendering wouldn't be CPU or memory limited, so we used our existing PC test-bed, featuring a Core i7 3770K overclocked to 4.3GHz and 16GB of DDR3 memory running at 1600MHz.

Choosing the right graphics hardware would be somewhat more challenging. On the face of it, Microsoft's GPU sounds remarkably similar to AMD's Bonaire design, as found in the Radeon HD 7790, while Sony's choice is uncannily like the Pitcairn product, the closest equivalent to the PS4's graphics chip being the desktop Radeon HD 7870, or closer still in terms of clock-speed, the laptop Radeon HD 7970M. In all cases, the console products run at lower clocks, and also have two fewer compute units - we suspect that these units are indeed present in the console processors but are disabled in order to increase the number of chips that the platform holders can use from the production line - the yield. Should a chip be fabricated with a defect in the graphics area, the foundry can simply disable the affected compute units and still use the processor. To ensure parity between all consoles, two units would be disabled on all chips regardless - similar to the way in which one of the SPUs on the PlayStation 3's Cell is inactive.

Disabling compute units is useful for Microsoft's and Sony's production runs, but it also means the console GPUs have no direct equivalents in the PC space. So, we chose the Radeon HD 7850 for our "target Xbox One" (16 compute units vs. 12 in the XO hardware) and the Radeon HD 7870 XT as our PS4 surrogate (24 compute units vs. 18 in the Sony console). This maintains the relative performance differential between the two consoles - just like the PS4, our target version has 50 per cent more raw GPU power. Clearly though, our kit is still far more powerful than the consoles, so to bring compute throughput down to console levels, we drop the clock-speed on both cards all the way down to 600MHz. Now, some games achieve better performance with higher clocks, while others benefit from more cores, but at least with this compromise we have the right levels of compute power, not to mention the correct ratio of bandwidth per flop to provide parity with PS4.
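The compute parity behind this set-up can be checked with standard GCN peak-throughput arithmetic. The sketch below assumes 64 ALU lanes per compute unit, each retiring one fused multiply-add (two floating-point operations) per clock, and assumes an 800MHz GPU clock for both consoles, a figure not stated in this article. The function name `peak_tflops` is purely illustrative.

```python
# Peak single-precision throughput of a GCN GPU. ASSUMPTIONS: 64 ALU lanes per
# compute unit, each retiring one fused multiply-add (2 FLOPs) per clock.
# Real games never sustain this theoretical peak.
LANES_PER_CU = 64
FLOPS_PER_LANE_PER_CLOCK = 2  # one FMA counts as a multiply plus an add


def peak_tflops(compute_units: int, clock_mhz: float) -> float:
    """Return theoretical peak FP32 throughput in teraflops (hypothetical helper)."""
    flops_per_second = (compute_units * LANES_PER_CU
                        * FLOPS_PER_LANE_PER_CLOCK * clock_mhz * 1e6)
    return flops_per_second / 1e12


# PC surrogates at the 600MHz clock used in the tests.
xo_target = peak_tflops(16, 600)   # HD 7850: 16 CUs
ps4_target = peak_tflops(24, 600)  # HD 7870 XT: 24 CUs

# Consoles, ASSUMING an 800MHz GPU clock for both.
xbox_one = peak_tflops(12, 800)
ps4 = peak_tflops(18, 800)

print(f"XO target {xo_target:.3f}TF vs. Xbox One {xbox_one:.3f}TF")
print(f"PS4 target {ps4_target:.3f}TF vs. PS4 {ps4:.3f}TF")
print(f"Compute ratio: {ps4_target / xo_target:.2f}x")
```

Under those assumptions, 16 CUs at 600MHz and 12 CUs at 800MHz work out to the same theoretical figure, and the 24 vs. 16 CU split preserves the 1.5x ratio. These are peak numbers only; games do not convert them into frame-rate linearly.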

Choice of memory speed is the next big question. On both platforms we set the memory clock to 1375MHz to match PlayStation 4 (this involves downclocking the 7870 XT and upclocking the 7850). This potentially gives our XO surrogate an advantage the actual console may not have, since the real Xbox One pairs slower DDR3 main memory with a small pool of fast ESRAM. On the flipside, it does mean we can assess the graphics hardware itself on equal ground - and bandwidth is something we'll go into in more depth later.
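Here is a rough sketch of what the 1375MHz memory clock means in bandwidth terms, and how that bandwidth divides across each card's compute. It assumes GDDR5's four data transfers per pin per memory clock and a 256-bit bus on both the HD 7850 and the HD 7870 XT; the helper name is hypothetical.

```python
# Peak GDDR5 bandwidth from memory clock and bus width. ASSUMPTION: GDDR5 moves
# four bits per pin per memory-clock cycle, so 1375MHz gives 5.5Gbps per pin.
def gddr5_bandwidth_gbs(memory_clock_mhz: float, bus_width_bits: int) -> float:
    """Return theoretical peak bandwidth in GB/s (decimal gigabytes)."""
    data_rate_gbps = memory_clock_mhz * 4 / 1000  # per-pin data rate
    return data_rate_gbps * bus_width_bits / 8     # bits -> bytes


shared_bandwidth = gddr5_bandwidth_gbs(1375, 256)  # both surrogates, 256-bit buses

# Peak FP32 teraflops at 600MHz: CUs x 64 lanes x 2 FLOPs x clock.
xo_target_tflops = 16 * 64 * 2 * 600e6 / 1e12
ps4_target_tflops = 24 * 64 * 2 * 600e6 / 1e12

for name, tflops in [("XO target", xo_target_tflops), ("PS4 target", ps4_target_tflops)]:
    bytes_per_flop = shared_bandwidth / (tflops * 1000)  # GB/s divided by GFLOP/s
    print(f"{name}: {shared_bandwidth:.0f}GB/s, {bytes_per_flop:.3f} bytes per FLOP")
```

The PS4 surrogate ends up with the same peak compute and the same 176GB/s as the console, so its bytes-per-FLOP ratio matches; the XO surrogate gets 1.5x as much bandwidth per FLOP, which is the potential advantage described in the paragraph above.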

Benchmarking the target hardware

So the question is, how does AMD profile its own hardware and adjust its designs accordingly? Clearly part of the process will involve using existing game engines, so that's the approach we'll take, kicking off with our usual range of benchmarks. To get as close to next-gen workloads as possible, we've been a little more selective in our choice of settings. 1080p is clearly the target resolution for the next-gen consoles, so it is the sole focus of our like-for-like testing. Multisample anti-aliasing (MSAA) is off the table, as its usage in the next-gen titles we've seen thus far has been close to non-existent.

| Game engine | HD 7850 (600MHz), target Xbox One platform | HD 7870 XT (600MHz), target PS4 platform | Performance differential |
|---|---|---|---|
| BioShock Infinite, DX11 Ultra DDOF | 31.8fps | 38.2fps | 20.4% |
| BioShock Infinite, DX11 | 40.5fps | 49.5fps | 22.7% |
| Tomb Raider, Ultimate (TressFX) | 22.4fps | 29.8fps | 33% |
| Tomb Raider, Ultra | 39.5fps | 50.3fps | 27.3% |
| Tomb Raider, High | 56.8fps | 69.6fps | 22.5% |
| Hitman Absolution, Ultra | 37.2fps | 45.2fps | 21.7% |
| Hitman Absolution, High | 44.1fps | 52.6fps | 19.3% |
| Sleeping Dogs, Extreme | 20.3fps | 26.2fps | 29% |
| Sleeping Dogs, High | 40.2fps | 50.9fps | 26.6% |
| Metro: Last Light, High | 25.5fps | 30.0fps | 17.6% |

The results pretty much confirm the theory that adding compute cores to the GCN architecture doesn't result in linear scaling of performance. That's presumably why AMD tends to increase core clock and memory speed on its higher-end cards: core count alone won't do the job. Looking at the results, Metro: Last Light shows the smallest difference - the extra 50 per cent of compute power produces just 17.6 per cent more performance. On the flipside, Tomb Raider hands in the most noticeable gains - up to 33 per cent once the insane TressFX hair simulation is engaged, with a handsome boost across a brace of other quality settings even without it.
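Dividing each frame-rate gain by the 50 per cent compute gain gives a simple "scaling efficiency" figure that makes the diminishing returns easier to compare across games. This is a sketch using the frame-rates from the benchmark table; the differentials are recomputed from those rounded figures, so they may not match the published column exactly, and the efficiency metric is an illustrative construct rather than one used in the original testing.

```python
# Frame-rates from the benchmark table: (XO target fps, PS4 target fps).
results = {
    "BioShock Infinite, DX11 Ultra DDOF": (31.8, 38.2),
    "BioShock Infinite, DX11": (40.5, 49.5),
    "Tomb Raider, Ultimate (TressFX)": (22.4, 29.8),
    "Tomb Raider, Ultra": (39.5, 50.3),
    "Tomb Raider, High": (56.8, 69.6),
    "Hitman Absolution, Ultra": (37.2, 45.2),
    "Hitman Absolution, High": (44.1, 52.6),
    "Sleeping Dogs, Extreme": (20.3, 26.2),
    "Sleeping Dogs, High": (40.2, 50.9),
    "Metro: Last Light, High": (25.5, 30.0),
}

COMPUTE_GAIN = 0.5  # the PS4 surrogate has 50 per cent more peak compute


def scaling_efficiency(xo_fps: float, ps4_fps: float) -> tuple[float, float]:
    """Return (frame-rate gain, fraction of the compute gain actually realised)."""
    fps_gain = ps4_fps / xo_fps - 1
    return fps_gain, fps_gain / COMPUTE_GAIN


for game, (xo_fps, ps4_fps) in results.items():
    gain, efficiency = scaling_efficiency(xo_fps, ps4_fps)
    print(f"{game:36s} +{gain:.1%} fps, {efficiency:.0%} of linear scaling")
```

A value of 100 per cent would mean the frame-rate rose in lockstep with compute; every title in the table lands well short of that.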

Game focus: Crysis 3

So the next question is to what extent multi-platform developers may need to compromise on their Xbox One versions in order to match PlayStation 4. There are numerous routes open here. Virtually all next-gen multi-platform titles will have PC versions, so the engines are already built with scalability in mind - to the point where the lowest common denominator on PC won't hold a candle to the performance of the Xbox One, let alone the PS4. The tech teams can easily dial back quality settings to claw back performance - be it for the Microsoft console or a much less powerful PC - or they can maintain parity between XO and PS4 in terms of features and simply scale back resolution. We decided to put this to the test using the closest thing we have to a next-gen game: the forward-looking, technologically brilliant Crysis 3. Most of the game benchmarks above (Metro: Last Light aside) are based on scaled-up console games. What makes Crysis 3 different is that it was built primarily for PC, then scaled down to run on current-gen consoles - exactly like many of the games we'll be playing in Q4 this year.

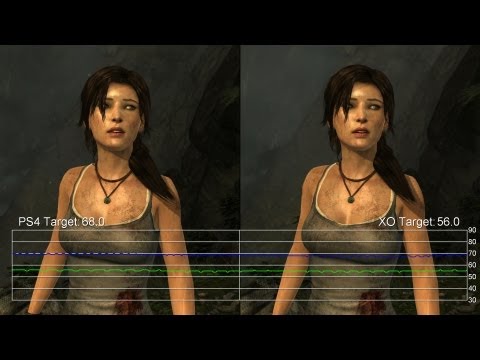

In this video we compare performance of our "target" hardware running at 1080p, then scale the Xbox One equivalent down, first to 1776x1000 (around 14 per cent fewer pixels). After that, we scale down again to 1600x900, which cuts the pixel count by around 31 per cent and brings it roughly into line with the one-third GPU compute deficit that Xbox One has compared with PlayStation 4. So in theory we should see the former sit between the two target platforms, and the latter bring our XO target into line with our PS4 surrogate. However, if you look at the video, this isn't what happens.
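Counting pixels directly shows how much per-pixel work each of these resolutions removes, and how close each comes to a pixel budget scaled exactly to the two-thirds compute ratio between the consoles (12 vs. 18 compute units, assuming equal clocks). A minimal sketch:

```python
# Pixel counts for the resolutions used in the Crysis 3 test, relative to 1080p.
NATIVE = (1920, 1080)
resolutions = [(1920, 1080), (1776, 1000), (1600, 900)]

native_pixels = NATIVE[0] * NATIVE[1]
for width, height in resolutions:
    pixels = width * height
    share = pixels / native_pixels
    print(f"{width}x{height}: {pixels:,} pixels, {share:.1%} of 1080p, {1 - share:.1%} fewer")

# Xbox One has two-thirds of the PS4's CUs (12 vs. 18); ASSUMING equal clocks,
# a pixel count scaled exactly in line with that compute ratio would be:
print(f"Compute-matched pixel budget: {native_pixels * 12 // 18:,}")
```

Pixel count is only a proxy: per-frame work such as geometry and shadow-map rendering does not shrink with resolution, which is one reason frame-rate need not track these ratios exactly.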

Dropping to 1600x900 actually sees the target Xbox One set-up move significantly ahead of the PS4 equivalent running at 1080p, while the 1776x1000 stream lags just a touch behind - take a look further down the page to see the impact this downscaling has on image quality. Also, we find that despite a 50 per cent boost in computational power, average frame-rates on our PS4 equivalent are only 19.3 per cent ahead of our "Xbox One" (42.6fps vs. 35.7fps). It may be that the cost of the advanced shader work doesn't scale linearly with resolution, so servicing fewer pixels frees up more resources than you might expect. We don't know for sure, and clearly this is just one game - as the benchmarks above demonstrate, different titles on different quality settings will see different results. Another factor to bear in mind is the 30fps frame-rate cap on most console titles: it's a great performance leveller and, generally speaking, it tends to favour the weaker platform, which only falls below the cap in the most technologically demanding scenes.
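The headline percentage and the levelling effect of a frame-rate cap can both be sketched in a few lines. This is a deliberate simplification: the cap is applied to the average frame-rates, whereas a real cap acts per frame, so a platform averaging above 30fps can still dip below it in heavy scenes. The function names are hypothetical.

```python
# SIMPLIFICATION: the cap is applied to averages here; a real cap acts per frame.
FRAME_CAP = 30.0


def lead_percent(faster_fps: float, slower_fps: float) -> float:
    """Percentage by which one frame-rate exceeds another (hypothetical helper)."""
    return (faster_fps / slower_fps - 1) * 100


def capped(fps: float, cap: float = FRAME_CAP) -> float:
    """Frame-rate after a hard cap: it can never exceed the cap."""
    return min(fps, cap)


ps4_target, xo_target = 42.6, 35.7  # Crysis 3 averages at 1080p from the test
print(f"Uncapped lead: {lead_percent(ps4_target, xo_target):.1f}%")
print(f"Capped lead:   {lead_percent(capped(ps4_target), capped(xo_target)):.1f}%")
```

With both averages above 30fps, the cap hides the gap entirely; any remaining difference would only surface in the scenes where the slower platform drops under the cap.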

But of course, PlayStation 4's strengths aren't limited to its surfeit of graphics processing power - there's its bandwidth advantage too. Its 256-bit memory bus allows for a peak throughput of 176GB/s from its GDDR5. In contrast, Xbox One's DDR3 memory is limited to 68GB/s, with the slack taken up by 32MB of embedded static RAM (ESRAM) built into the processor, with a peak theoretical bandwidth of 192GB/s.
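Peak bandwidth follows from the per-pin data rate multiplied by the bus width. The sketch below assumes 5.5Gbps GDDR5 on the PS4's 256-bit bus and DDR3-2133 on a 256-bit bus for Xbox One; the Xbox One bus width and memory speed are public specs not stated in this article, so treat them as assumptions.

```python
# Peak bandwidth = per-pin data rate (Gbps) x bus width (bits) / 8 bits per byte.
# ASSUMPTIONS: PS4 GDDR5 at 5.5Gbps per pin; Xbox One DDR3-2133 on a 256-bit bus.
def peak_bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s (decimal gigabytes)."""
    return data_rate_gbps * bus_width_bits / 8


ps4_gddr5 = peak_bandwidth_gbs(5.5, 256)    # 176.0
xbox_ddr3 = peak_bandwidth_gbs(2.133, 256)  # roughly 68.3
ESRAM_PEAK_GBS = 192  # Xbox One ESRAM peak figure quoted in the text; 32MB only

print(f"PS4 GDDR5:      {ps4_gddr5:.1f}GB/s")
print(f"Xbox One DDR3:  {xbox_ddr3:.1f}GB/s")
print(f"Xbox One ESRAM: {ESRAM_PEAK_GBS}GB/s peak, for a 32MB pool only")
print(f"PS4 vs. Xbox One main memory: {ps4_gddr5 / xbox_ddr3:.2f}x")
```

The 192GB/s ESRAM figure applies only to data held in that 32MB pool; everything else is served at DDR3 speed.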

The bandwidth question

Even if the additional rendering power of the PS4's graphics chip isn't quite as pronounced as the raw numbers suggest, lack of bandwidth can be an absolute killer for performance - and that's a big worry for the Xbox One architecture. You can see this in the following video, where we compare the Radeon HD 7790 with the Radeon HD 7850. What's interesting about this comparison is that while the HD 7790 has fewer compute units than the HD 7850 (14 vs. 16), it's clocked higher, to the point where the raw compute potential of both is virtually identical at around 1.8 teraflops - PS4 territory. The difference comes down to bandwidth: the HD 7850's 256-bit memory bus gives it a massive 60 per cent bandwidth advantage over the HD 7790's 128-bit interface (the bus is twice as wide, but the HD 7790 runs its GDDR5 at a faster clock, which closes part of the gap).
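The "same compute, different bandwidth" premise of this comparison can be reproduced from the cards' reference specs. The clocks and memory data rates below are AMD's reference figures as we understand them (1000MHz core and 6Gbps memory for the HD 7790; 860MHz and 4.8Gbps for the HD 7850), not numbers given in this article, so treat them as assumptions.

```python
# ASSUMED reference specs: core clock (MHz), bus width (bits), GDDR5 Gbps per pin.
cards = {
    "HD 7790": {"cus": 14, "core_mhz": 1000, "bus_bits": 128, "gbps": 6.0},
    "HD 7850": {"cus": 16, "core_mhz": 860, "bus_bits": 256, "gbps": 4.8},
}


def peak_tflops(cus: int, core_mhz: float) -> float:
    # GCN: 64 lanes per CU, one FMA (2 FLOPs) per lane per clock.
    return cus * 64 * 2 * core_mhz * 1e6 / 1e12


def bandwidth_gbs(bus_bits: int, gbps: float) -> float:
    # Bus width x per-pin data rate, converted from bits to bytes.
    return bus_bits * gbps / 8


for name, c in cards.items():
    print(f"{name}: {peak_tflops(c['cus'], c['core_mhz']):.2f}TF, "
          f"{bandwidth_gbs(c['bus_bits'], c['gbps']):.1f}GB/s")

ratio = bandwidth_gbs(256, 4.8) / bandwidth_gbs(128, 6.0)
print(f"HD 7850 bandwidth advantage: {ratio - 1:.0%}")
```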

Three games are covered here: Crysis 3, Skyrim and Battlefield 3. The first two reveal up to a 20 per cent performance improvement owing to the additional bandwidth, but DICE's BF3 reflects the parity in compute power, offering virtually identical performance and suggesting that, sans MSAA, the engine isn't hugely reliant on bandwidth. Transplanting those findings across to the next-gen consoles, developers on the Microsoft console have their work cut out using the DDR3 and ESRAM effectively enough to match the sheer throughput of the PS4's memory bus. Getting good performance from the ESRAM is key to ensuring that Xbox One is competitive with the PS4.
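To see why effective ESRAM use is a juggling act, it helps to size a set of 1080p render targets against the 32MB pool. The render-target layout below is entirely hypothetical, chosen only to illustrate the arithmetic; real engines use many different formats and target counts.

```python
# How much of the 32MB ESRAM a set of 1080p render targets would occupy.
# The layout is HYPOTHETICAL; "MB" here means 2**20 bytes.
ESRAM_BYTES = 32 * 1024 * 1024
WIDTH, HEIGHT = 1920, 1080

# Render target name -> bytes per pixel (hypothetical deferred-rendering set-up).
render_targets = {
    "albedo (RGBA8)": 4,
    "normals (RGBA8)": 4,
    "material params (RGBA8)": 4,
    "HDR lighting (RGBA16F)": 8,
    "depth/stencil (D24S8)": 4,
}

total = 0
for name, bytes_per_pixel in render_targets.items():
    size = WIDTH * HEIGHT * bytes_per_pixel
    total += size
    print(f"{name:26s} {size / 2**20:6.2f}MB")

print(f"Total: {total / 2**20:.2f}MB of {ESRAM_BYTES / 2**20:.0f}MB ESRAM")
print("Fits in ESRAM" if total <= ESRAM_BYTES else "Must be split between ESRAM and DDR3")
```

In this hypothetical layout the targets total roughly 47MB, so a developer would have to choose which targets live in ESRAM and which stay in slower DDR3.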

Conclusion: the PS4 advantage and the Xbox One challenge

In summary, the PS4 enjoys two key strengths over the Xbox One in terms of rendering prowess: raw GPU power and masses of bandwidth. On the face of it, the specs suggest a whitewash, but it seems clear that one of those advantages - the 50 per cent increase in compute power - doesn't result in the stratospheric boost to performance you might imagine. The PS4 is clearly more powerful, but the evidence suggests that quality tweaks and/or resolution changes could help produce level frame-rates on both platforms running the same games. Bandwidth remains the big issue - the PS4's 256-bit bus is established technology, and easy to utilise. Xbox One's ESRAM is the big unknown, specifically in terms of how fast it actually is and how quickly developers are able to make the most of it. In our benchmarks and game testing we gave the Xbox One target the benefit of the doubt by equalising bandwidth, but that parity is in no way guaranteed on the real hardware.

Clearly, the next-gen battle is shaping up to be a fascinating contest. What we're looking at are two consoles designed from the same building blocks but with two entirely different approaches in mind. According to inside sources at Microsoft, the focus with Xbox One was to extract as much performance as possible from the graphics chip's ALUs. It may well be the case that 12 compute units was chosen as the most balanced set-up to match the Jaguar CPU architecture. Our source says that the make-up of the Xbox One's bespoke audio and "data move engine" tech is derived from profiling the most advanced Xbox 360 games, with their designs implemented to address the most common bottlenecks. In contrast, despite its undoubted advantages - especially in terms of raw power - PlayStation 4 looks a little unbalanced. And perhaps that's why the Sony team led by Mark Cerny set about redesigning and beefing up the GPU compute pipeline: they would have seen the unused ALU resources and realised they could turn them into an opportunity for developers to do something different with the graphics hardware.

Cerny himself admits that GPU compute isn't likely to come into its own until year three or four of the PS4's lifecycle. One development source working directly with the hardware told us that "GPU compute is the new SPU", referring both to the difficulty coders had in accessing the power of the PS3's Cell processor and to the potential of the hardware. There's a sense that this is uncharted territory, an aspect of the graphics tech that's going to give the system a long tail in terms of extracting its full potential. But equally, this isn't going to happen overnight, and almost certainly not in the launch period. That being the case, unlikely as it may sound given the computational deficit in its graphics hardware, Xbox One multi-platform games theoretically have a pretty good shout at getting close to their PS4 equivalents, with only minor compromises. Further into the lifecycle, the question becomes whether GPU compute will be a significant factor in PS4 development when the additional effort would yield little in the way of dividends for the Xbox One version of the game.
